The intersection of AI and blockchain is becoming a defining trend in Web3. Many dapps integrate AI, but most rely on centralized infrastructure for model execution. Chromia is developing infrastructure that moves inference on-chain, building toward greater transparency and decentralization.

How Inference Works Today

When you run an AI model, you typically either host it on a cloud server yourself or use a third-party provider. For example, interacting with ChatGPT involves sending queries to models hosted on OpenAI’s servers. In both cases, the core inference (the step where the model processes your input and generates output) happens on a centralized cloud server.

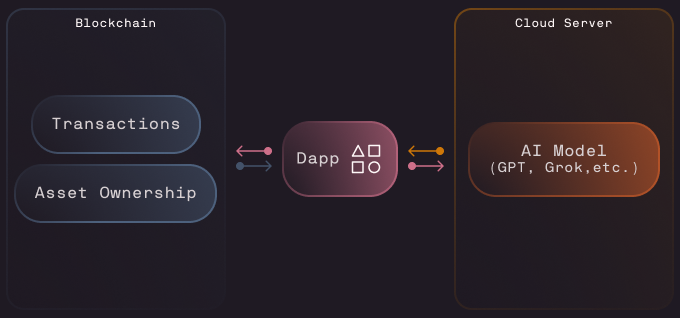

How This Relates to Dapps

An increasing number of dapps feature AI capabilities, but in practice, they offload the AI processing to external services. While blockchain handles transactions and tracks asset ownership, any AI-driven output is generated on a traditional server.

This creates a hybrid structure: some parts of the application are decentralized, but the actual inference is not.

What Is the Problem?

The biggest concern is transparency.

As an end user, you may not be able to verify which AI model is being used, its version history, or whether it has been modified (potentially introducing bias). As a developer, you face the same blind spots when relying on a model hosted by a third party.

There’s also a structural contradiction. A decentralized app that depends on centralized processing undermines its own design. If the goal of Web3 is to reduce reliance on centralized intermediaries, this is a core issue that needs to be addressed.

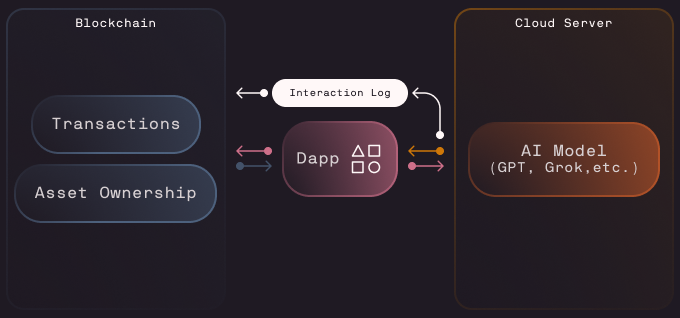

Using Blockchain as an Interaction Log

A transitional approach is to log AI-related interactions on-chain. This doesn’t eliminate centralization but adds a layer of transparency. For example, the Chromia Neural Interface extension allows developers to record AI agent inputs and outputs on-chain.

While this is a step in the right direction, it isn’t enough. Logging interactions on the blockchain increases transparency, but the model itself is still a black box.
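To make that limitation concrete, here is a minimal sketch of a tamper-evident interaction log. It is a hypothetical illustration only, not the Chromia Neural Interface API: the `InteractionRecord` type, its fields, and the `append`/`verify` helpers are invented for this example, and a hash chain with an externally stored head digest stands in for an on-chain record.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

# Hypothetical starting value for the chain; not part of any Chromia schema.
GENESIS = "0" * 64


@dataclass(frozen=True)
class InteractionRecord:
    # Hypothetical fields for illustration only.
    model_id: str   # model name/version as *claimed* by the off-chain provider
    prompt: str
    output: str
    prev_hash: str  # digest of the previous record, linking the log together

    def digest(self) -> str:
        # Canonical JSON so an identical record always yields the same hash.
        payload = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, model_id: str, prompt: str, output: str) -> InteractionRecord:
    # Link the new record to the digest of the current last record.
    prev = log[-1].digest() if log else GENESIS
    record = InteractionRecord(model_id, prompt, output, prev)
    log.append(record)
    return record


def verify(log: list, head: str) -> bool:
    # `head` plays the role of the digest anchored on-chain.
    # Any edit, removal, or reordering of records breaks the chain or the head.
    prev = GENESIS
    for record in log:
        if record.prev_hash != prev:
            return False
        prev = record.digest()
    return prev == head
```

The log can prove that a given prompt produced a given output and that the history was not rewritten afterward. It cannot prove that `model_id` is truthful or which weights actually generated the output, because inference still happens off-chain. That gap is what moving inference itself onto provider nodes is meant to address.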

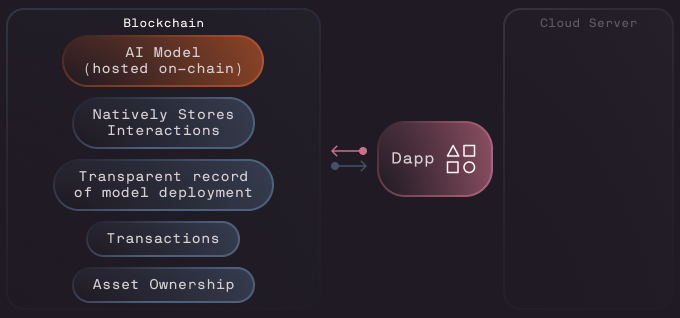

Chromia’s AI Inference Extension

Chromia has taken a first step toward solving this issue with the AI Inference Extension, developed in Q1. This framework allows developers to deploy LLMs (e.g. GPT, DeepSeek, Llama) that are hosted on Chromia provider nodes rather than on cloud servers.

In Q2, we are focused on AI Inference Optimization: using the Extension to deploy models that run reliably and efficiently by improving stability and resource allocation and by reducing latency for on-chain execution.

Our vision is full transparency in AI-enabled blockchain applications, achieved by hosting LLMs directly on-chain while natively storing interactions and deployment information. This approach will enable end-to-end visibility, something that current cloud-based designs cannot provide.

Next Steps

We are currently using the AI Inference Extension to experiment with LLMs on an internal testnet. The framework is being refined to support additional models, and we plan to release a public demo in the future.

From the beginning, Chromia has focused on overcoming the limitations of traditional blockchain design and reducing reliance on centralized servers when building dapps. As our goals have evolved, this mission now extends to decentralized AI.

The AI Inference Extension and the Vector Database Extension are two key components of this broader vision. Follow along as we continue to push the boundaries!

About Chromia

Chromia is a Layer-1 relational blockchain platform that uses a modular framework to empower users and developers with dedicated dapp chains, customizable fee structures, and enhanced digital assets. By fundamentally changing how information is structured on the blockchain, Chromia provides natively queryable data indexed in real time, challenging the status quo to deliver innovations that will streamline the end-user experience and facilitate new Web3 business models.
